- cross-posted to:

- technology@lemmy.ml

The echoes of Y2K resonate in today’s AI landscape as executives flock to embrace the promise of cost reduction through outsourcing to language models.

However, history is poised to repeat itself with a similar outcome of chaos and disillusionment. The misguided belief that language models can replace the human workforce will yield hilarious yet unfortunate results.

this thing is going to take the whole western economy down with it when it crashes

That’s what I’m expecting given that tech stocks are basically propping the whole market up at this point. Once those start crashing there’s gonna be a huge panic.

let the motherfucker burn

Affordable graphics cards so I can play my games pls

best I can do is a subscription to a game streaming service with 500ms latency

Low spec free online multiplayer gamers stay winning

Remembering when the 750TI was like $130

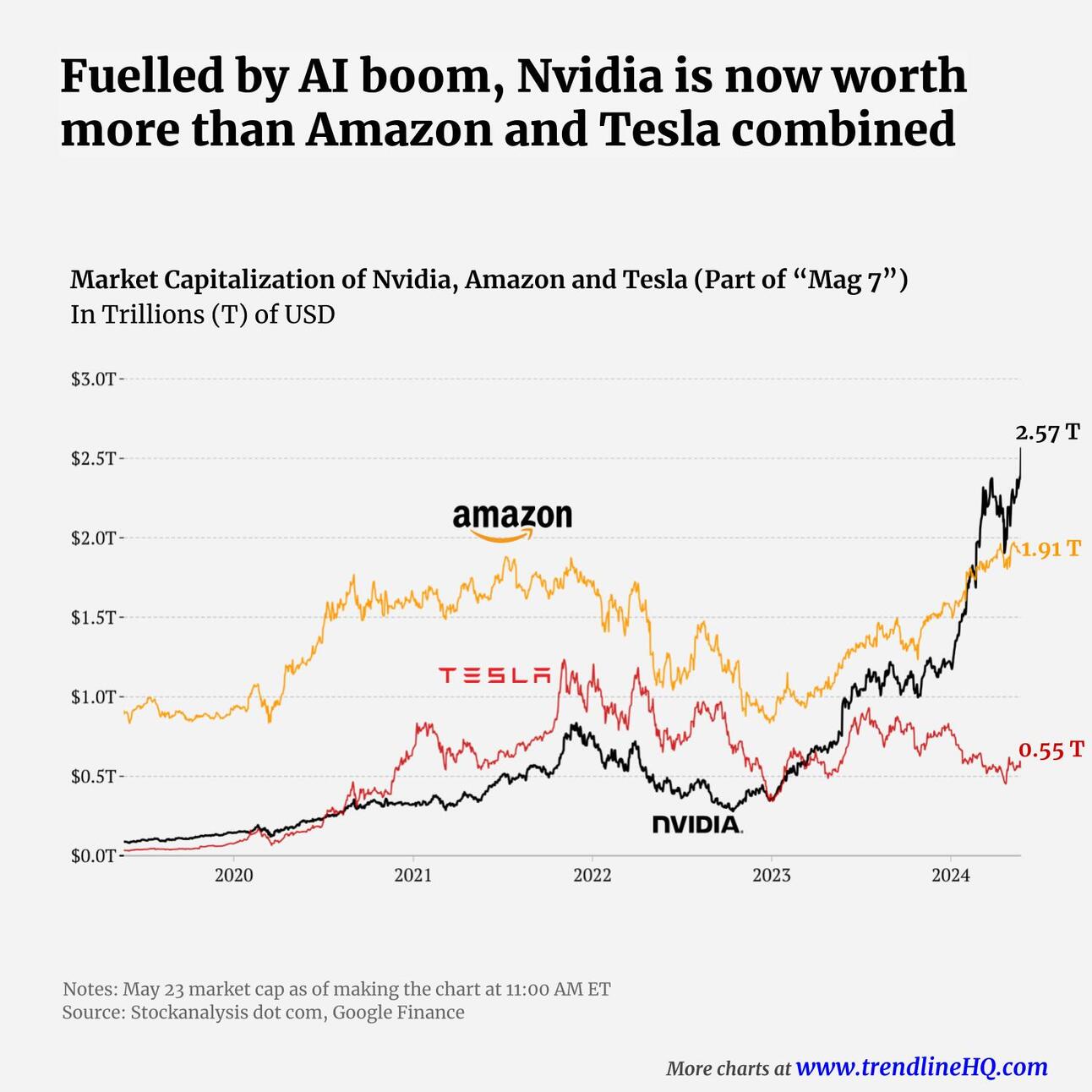

Worth more than Amazon + Tesla combined

The computing services and e-commerce monopoly + the carbon credits scam monopoly

if we had stopped the graphical development of video games at the ps2 era none of this would have happened. we could have had a better world

let a thousand complex dwarf fortress-like simulations bloom. now with polygons!

If I were Illuminati-brained, I would believe that Nvidia is Illuminati. Two back-to-back awful fads (crypto and AI) have both been such a boon for them.

These models perform quite poorly when it comes to actually increasing employee productivity. Therefore, attempts to incorporate them into businesses fall into two camps:

- The incompetent bazinga business tyrants that are chasing a false promise and aren’t savvy enough to recognize when they’re actually hurting productivity or don’t care because they see more value in marketing themselves as “using AI” than in actually doing better because of it.

- The competent MBAs and other finance ghouls that know it sucks but know it’s useful for disciplining labor and changing the composition of their workforce to be more “replaceable”, i.e. proletarianized (even if they don’t know that word).

We all know examples of the former. Business owners and middle managers are often incompetent and very full of themselves and make very bazinga decisions.

But the latter is the trend. They’re what the big businesses are listening to, the big monopolies that really control production. They don’t actually care that much if their per-employee productivity goes down a little right now so long as they can rapidly make it up in the form of using a larger, cheaper labor pool and reduced turnover, hiring, and HR costs. But to get that pool you have to change the composition of your workforce: you have to fire the more expensive people (e.g. a senior dev) and use that savings to hire, say, a junior dev and pretend that’s just as good.

This will and does lead to catastrophe, of course. Not just for the economy overall but even at the individual business level: the stuff they’re allowing these LLMs to do is creating huge liabilities, like “AIs” that make up company policies and refunds, or “AIs” that produce bad code that gets poorly reviewed and makes its way to production because of their “new process” (having fewer developers).

Unfortunately, so many companies are so totally useless and bazinga-ed to their very core that an autogenerating tool really can spit out similar drivel to whatever stupid shit they were going to pay someone to write / do

It’s fucking absurd, their last earnings was a 12 billion profit at a 50% profit margin…

For comparison, other monopolies like Microsoft, Meta, Google, and Apple are only about 25-30%

All these GPUs for shitty genAI crap that does almost nothing but make people unemployed and homeless

Did Nvidia engineer the AI boom just as crypto was beginning to flag? No, but it would not be unreasonable to assume so.

My Crypto Apes are really feeling it now! I’m sure LLMs are the future!