Recent years have seen a surge in the popularity of commercial AI products based on generative, multi-purpose AI systems promising a unified approach to building machine learning (ML) models into technology. However, this ambition of “generality” comes at a steep cost to the environment, given the amount of energy these systems require and the amount of carbon that they emit. In this work, we propose the first systematic comparison of the ongoing inference cost of various categories of ML systems, covering both task-specific (i.e. finetuned models that carry out a single task) and ‘general-purpose’ models (i.e. those trained for multiple tasks). We measure deployment cost as the amount of energy and carbon required to perform 1,000 inferences on a representative benchmark dataset using these models. We find that multi-purpose, generative architectures are orders of magnitude more expensive than task-specific systems for a variety of tasks, even when controlling for the number of model parameters. We conclude with a discussion around the current trend of deploying multi-purpose generative ML systems, and caution that their utility should be more intentionally weighed against increased costs in terms of energy and emissions. All the data from our study can be accessed via an interactive demo to carry out further exploration and analysis.

Text-based tasks are, all things considered, more energy-efficient than image-based tasks, with image classification requiring less energy (median of 0.0068 kWh for 1,000 inferences) than image generation (1.35 kWh) and, conversely, text generation (0.042 kWh) requiring more than text classification (0.0023 kWh). For comparison, charging the average smartphone requires 0.012 kWh of energy, which means that the most efficient text generation model uses as much energy as 16% of a full smartphone charge for 1,000 inferences, whereas the least efficient image generation model uses as much energy as 950 smartphone charges (11.49 kWh), or nearly 1 charge per image generation, although there is also a large variation between image generation models, depending on the size of image that they generate.
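To make the smartphone comparison easier to check, here is a rough sketch that redoes the arithmetic using only the figures quoted in the excerpt. The names (`SMARTPHONE_CHARGE_KWH`, `smartphone_charges`, and so on) are made up for illustration and do not come from the paper.

```python
# Figures quoted in the excerpt above (kWh per 1,000 inferences).
# All names here are illustrative, not taken from the paper.
SMARTPHONE_CHARGE_KWH = 0.012  # one average smartphone charge, per the excerpt

MEDIAN_KWH_PER_1000 = {
    "text classification": 0.0023,
    "image classification": 0.0068,
    "text generation": 0.042,
    "image generation": 1.35,
}


def smartphone_charges(kwh: float) -> float:
    """Convert an energy figure in kWh into equivalent smartphone charges."""
    return kwh / SMARTPHONE_CHARGE_KWH


for task, kwh in MEDIAN_KWH_PER_1000.items():
    charges = smartphone_charges(kwh)
    print(f"{task:>20}: {kwh:.4f} kWh = {charges:.2f} charges per 1,000 inferences")

# Least efficient image generation model quoted in the excerpt: 11.49 kWh per 1,000 images.
worst_image_gen_charges = smartphone_charges(11.49)
charges_per_image = worst_image_gen_charges / 1000
print(f"worst image generation model: {worst_image_gen_charges:.1f} charges per 1,000 images")
print(f"that is {charges_per_image:.2f} charges per image")
```

With these inputs the worst-case figure comes out slightly above the 950 charges quoted, which suggests the excerpt rounds either the charge energy or the result.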

The most carbon-intensive image generation model (stable-diffusion-xl-base-1.0) generates 1,594 grams of CO2 for 1,000 inferences, which is roughly equivalent to 4.1 miles driven by an average gasoline-powered passenger vehicle, whereas the least carbon-intensive text generation model (distilbert-base-uncased) generates as much carbon as 0.0006 miles driven by a similar vehicle, i.e. 6,833 times less. This can add up quickly when image generation models such as Dall·E and MidJourney are deployed in user-facing applications and used by millions of users globally.
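The driving comparison can be sanity-checked the same way. This sketch uses only the three numbers quoted in the excerpt; the grams-per-mile factor and the distilbert emissions figure are back-calculated from them, not stated in the text, and the variable names are illustrative.

```python
# Numbers quoted in the excerpt, per 1,000 inferences.
GRAMS_CO2_SDXL = 1594.0    # stable-diffusion-xl-base-1.0
MILES_SDXL = 4.1           # driving equivalent quoted for that model
MILES_DISTILBERT = 0.0006  # driving equivalent quoted for distilbert-base-uncased

# Back-calculated assumption: the conversion factor implied by the first pair.
grams_per_mile = GRAMS_CO2_SDXL / MILES_SDXL

# Ratio between the two models, which the excerpt gives as 6,833.
ratio = MILES_SDXL / MILES_DISTILBERT

# Emissions implied for distilbert if the same conversion factor applies.
implied_distilbert_grams = MILES_DISTILBERT * grams_per_mile

print(f"implied conversion factor: {grams_per_mile:.1f} g CO2 per mile")
print(f"ratio between the two models: {ratio:,.0f}x")
print(f"implied distilbert emissions: {implied_distilbert_grams:.3f} g CO2 per 1,000 inferences")
```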

why are we comparing this shit to driving a car instead of to some other computer task? nobody generating images is doing it instead of driving around the block a few times for fun.

streaming a movie is probably less efficient than downloading a file and playing it back offline but covering that doesn’t cash in on the “ai” fad.

i wonder how it compares to playing a videogame or something

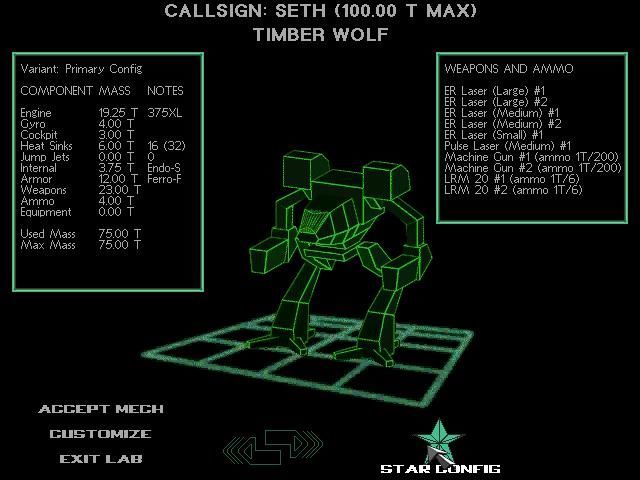

i generate ~20-30 npc portraits per week ish, it tops out my graphics card and puts my cpu at about 75% for about 10 minutes per 9 images

playing almost any reasonably recent aaa game does that for however long i play it for, usually at least two hours per session
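A back-of-the-envelope version of that comparison, using only the numbers in the two comments above. It assumes (loudly) that generating images and gaming load the hardware about equally, which is what the comments suggest but nobody here measured. The names are made up for illustration.

```python
# Numbers from the comments above.
MINUTES_PER_BATCH = 10        # roughly 10 minutes of full load...
IMAGES_PER_BATCH = 9          # ...per 9 generated images
GAMING_SESSION_MINUTES = 120  # "at least two hours per session"


def generation_minutes(images: int) -> float:
    """Minutes of full-load time needed to generate the given number of images."""
    return images / IMAGES_PER_BATCH * MINUTES_PER_BATCH


# ASSUMPTION: both workloads draw about the same power, so time at load
# stands in for energy. No wattage was measured for either one.
for weekly_images in (20, 30):
    minutes = generation_minutes(weekly_images)
    sessions_ratio = GAMING_SESSION_MINUTES / minutes
    print(f"{weekly_images} portraits/week = {minutes:.1f} min at full load; "
          f"one gaming session is {sessions_ratio:.1f}x that")
```

So under that assumption, a week of portraits is somewhere around a fifth to a quarter of a single two-hour session.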

AI models are inefficient by necessity, since they’re general purpose. For example, if you ask an LLM-based model like ChatGPT to do dissimilar tasks, let’s say format a table in a specific markdown language and then also serve as a proofreading tool, the model has to run inference through the entire architecture to get an output each time, even though the majority of weights are unlikely to be active for any one task.

Overall, the commercial AI boom was enabled by the transformer architecture, which can be scaled quite easily but has always been pretty inefficient compared to convolutional approaches. This is why ensembles of expert models, where you have made-for-purpose models doing specific tasks in a pipeline, are a hot topic as a way to save on inference time/cost/energy/hardware. There is also a growing body of work on post-transformer architectures, which are supposed to be more data- and energy-efficient.

Great, so all those shitty images also worsen the climate crisis

Remember that for the one cool AI generated image you see there are god knows how many garbage ones.

IMO obscuring the energy and computation cost of AI bullshit is the worst thing about the whole AI hype right now.