now I can secretly slow down the trolley and kill fewer people per second (everyone will still die) 🥰🥰🥰🥰🥰

nnn is inherently fascist

real communists do locktober

Nuclear Throne is such a good ass game

something funny I never connected the dots on is that every single waterfall etc in Skyrim is always exactly on the border between two cells lol

cause the water height is defined per cell so everything below that z coord is just water

must’ve been a pain in the ass to design the rivers with those conditions tbh

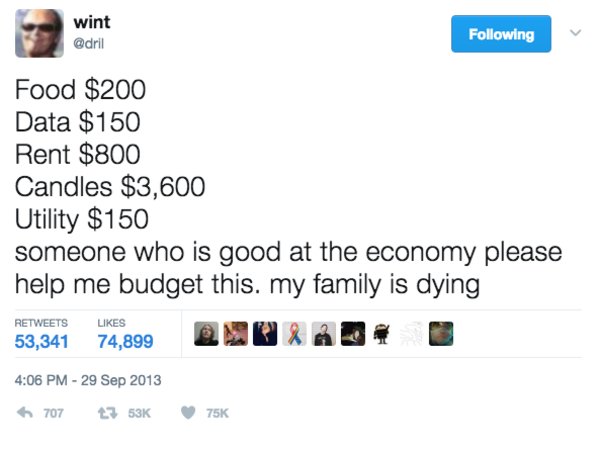

It’s from a dril tweet

Just to note, there will actually be a VM involved. WSL1 was a compatibility layer, more similar to Wine, but its compatibility wasn't the best cause it didn't implement every Linux system call, and notably here it lacked multilib support. WSL2 is just a VM running on Hyper-V with a full, real Linux kernel, and it's necessary here to actually run 32-bit binaries.

Sorry for the late response,

I can't help with PySpark specifically cause I have no experience with it. In general tho, you'll have to install the tooling you need to compile/run the program inside WSL. I haven't used Spark in years so I don't know the specifics, but you'll want at least Java and Python installed there. On Ubuntu, that means the packages `default-jdk`, `python3`, `python3-pip`, `python3-venv` (if you're using venv), and `python-is-python3` for convenience.

If you're using venv, you might want to rerun `python -m venv env` to make sure it has the files Bash needs, then run `source env/bin/activate` to activate it. You might also have to install pyspark from the Bash shell in case it needs to build anything platform specific.

You can set environment variables in `~/.bashrc` in the form `export VARIABLE=VALUE` (put quotes around VALUE if it has spaces etc.). This is the home dir in the Linux VM, not Windows, so edit it from the terminal, e.g. `nano ~/.bashrc`, or `vim ~/.bashrc` if you're familiar with vi. Then start a new shell to load the changes (`exec bash` replaces the currently running shell with a new process).

From there you should be able to just run the code normally but in WSL instead
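If you want to sanity-check the setup from inside WSL before running anything, a tiny Python script can do it. This is just a sketch, not anything PySpark-specific: it assumes the tools you need are the `java` and `python3` executables installed by the packages above, and `JAVA_HOME` is only a hypothetical example of a variable you might export in `~/.bashrc`.

```python
import os
import shutil
import sys

# Assumption: these executables come from the Ubuntu packages default-jdk and python3.
REQUIRED_TOOLS = ["java", "python3"]


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the tools from `tools` that shutil.which() can't find on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


def in_virtualenv():
    """True when running inside a venv (sys.prefix differs from the base prefix)."""
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("missing from PATH:", ", ".join(missing))
    else:
        print("all required tools found")
    print("inside venv:", in_virtualenv())
    # JAVA_HOME is a hypothetical example of a variable set via export in ~/.bashrc
    print("JAVA_HOME =", os.environ.get("JAVA_HOME", "<not set>"))
```

Run it with the venv activated; `inside venv: True` means `source env/bin/activate` worked, and anything listed as missing still needs installing in WSL.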

You could try WSL. It's basically just a headless Linux VM, so it's ideal for stuff like this. The terminal itself is just running on Windows, so no issues with framerate or anything: https://learn.microsoft.com/en-us/windows/wsl/install

Wouldn't that imply the opposite though? AFAIK PCs had already been using dedicated VRAM by the 7th console generation

nah they have other stuff, they’ll talk about how like cryptocurrencies will encourage a move to green energy and magically resolve inefficiencies and shit. “Bitcoin is a store of energy” is a funny phrase they’ll bandy about too

Interestingly, this is the exact same shit cryptocurrency nerds said

ay yo I didn’t know she uploaded a new video

I started using Jerboa a while back and the best feature in this app is that on c/traa posts the comm title just scrolls by for like 10 seconds

And shortly after this revelation, it became clear that there was another big issue: X was changing anything ending in “Twitter.com” to “X.com.”

aaaaaaaaaaAAAAAAAAAAAAAAAAAAA

I mean yeah and “that’s cute honey do you want a cigarette” is basically what geologists told him lmfao

ehhh, the modern inception of the idea was Wegener in the 1910s, but he had no real mechanism for it.

it really wasn't until the 60s that it was solidified into a single theory (plate tectonics) with strong evidence behind it and became widely accepted

wondrous